AWS Auto Scaling: Cost-Saving Best Practices

AWS Auto Scaling automatically adjusts resource capacity to match demand, maintaining performance while keeping costs in check. It works across services like EC2, ECS, DynamoDB, and Aurora, and it carries no additional charge: you pay only for the resources you run and the CloudWatch monitoring you use.

To make the most of it and save money:

- Use Spot Instances: Save up to 90% compared to On-Demand Instances for flexible workloads like CI/CD pipelines and web servers.

- Combine Spot and On-Demand Instances: Mix instance types to balance cost savings and stability. Understanding how to choose your EC2 instances is critical for this balance.

- Set Smart Scaling Policies: Use target tracking for steady scaling, step scaling for traffic spikes, and predictive scaling for recurring demand patterns.

- Monitor and Optimize: Regularly review scaling reports, track metrics in CloudWatch, and adjust configurations based on usage.

- Automate Savings Plans: Tools like Opsima help manage Reserved Instances and Savings Plans to reduce costs by up to 40%.

AWS Auto Scaling ensures you're only paying for what you need while maintaining performance. By combining smart scaling strategies with consistent monitoring, you can significantly reduce cloud expenses.

AWS Auto Scaling Cost-Saving Strategies Comparison Chart

Use Spot Instances in Auto Scaling Groups

Spot Instances vs. On-Demand Instances

Spot Instances offer the same performance as On-Demand Instances but at a fraction of the cost - up to 90% less. The trade-off? AWS can reclaim these instances with just a two-minute warning when they need the capacity. This makes Spot Instances a great fit for stateless and fault-tolerant applications like containerized workloads, CI/CD pipelines, web servers, and big data processing.

Big names have already reaped the benefits. The NFL has saved over $20 million since 2014, Lyft slashed its monthly compute costs by 75% with minimal code changes, and Salesforce trims over $1 million off its monthly bills by using Spot Instances.

| Instance Purchase Type | Price Point | Availability | Best For |
|---|---|---|---|
| Spot Instances | Up to 90% discount | Subject to interruption (two-minute notice) | Stateless, flexible workloads |
| On-Demand Instances | Standard hourly rate | Not reclaimed by AWS once running | Stateful, inflexible applications |

For even better cost efficiency without compromising performance, consider combining Spot Instances with On-Demand Instances using mixed instance types.

Configure Mixed Instance Types

A smart approach is to mix instance types: keep a small base of On-Demand Instances for stability, then add Spot Instances to handle extra capacity. For example, you could set up 10 On-Demand Instances as your base and use Spot Instances to scale up as needed.
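To get a feel for what such a mix does to the bill, here is a minimal sketch that estimates the blended hourly cost of a group with an On-Demand base and Spot capacity on top. The $0.10 hourly price and the 70% Spot discount are made-up placeholders, not AWS quotes, and `blended_hourly_cost` is a hypothetical helper.

```python
def blended_hourly_cost(total_instances, on_demand_base, on_demand_price, spot_discount):
    """Estimate hourly cost when every instance above the base runs on Spot."""
    if not 0 <= spot_discount < 1:
        raise ValueError("spot_discount must be in [0, 1)")
    on_demand_count = min(total_instances, on_demand_base)
    spot_count = total_instances - on_demand_count
    spot_price = on_demand_price * (1 - spot_discount)
    return on_demand_count * on_demand_price + spot_count * spot_price


# Hypothetical numbers: 30 instances, $0.10/hour On-Demand, 70% Spot discount.
all_on_demand = blended_hourly_cost(30, 30, 0.10, 0.70)
mixed = blended_hourly_cost(30, 10, 0.10, 0.70)
savings_pct = round(100 * (1 - mixed / all_on_demand), 1)
print(f"all On-Demand: ${all_on_demand:.2f}/h, mixed: ${mixed:.2f}/h, saved: {savings_pct}%")
```

Real Spot discounts vary by instance type, Region, and time, so treat the output as an order-of-magnitude estimate only.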

Flexibility is crucial here. Configure your Auto Scaling group to include at least 10 different instance types for each workload. This increases the chances of finding available Spot capacity and minimizes interruptions. Use the price-capacity-optimized allocation strategy to balance cost savings with the risk of interruptions.

To further improve reliability, enable Capacity Rebalancing and distribute your Auto Scaling groups across multiple Availability Zones. This approach taps into deeper capacity pools and improves fault tolerance.

Avoid setting a maximum Spot price. When you leave it unset, the maximum defaults to the On-Demand price, which reduces premature terminations while you still pay the lower current Spot price.
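The recommendations above can be sketched as a request for boto3's `create_auto_scaling_group` call. This is one possible configuration, not the only valid one: the group name, launch template name, subnet IDs, sizes, and the list of instance types are all placeholders you would replace with your own.

```python
def mixed_instances_request(group_name, launch_template_name, instance_types,
                            subnet_ids, on_demand_base=10, min_size=10, max_size=40):
    """Build keyword arguments for the autoscaling create_auto_scaling_group call."""
    return {
        "AutoScalingGroupName": group_name,
        "MinSize": min_size,
        "MaxSize": max_size,
        # Subnets in different Availability Zones, comma-separated.
        "VPCZoneIdentifier": ",".join(subnet_ids),
        # Proactively replace Spot Instances that are at elevated risk of interruption.
        "CapacityRebalance": True,
        "MixedInstancesPolicy": {
            "LaunchTemplate": {
                "LaunchTemplateSpecification": {
                    "LaunchTemplateName": launch_template_name,
                    "Version": "$Latest",
                },
                "Overrides": [{"InstanceType": t} for t in instance_types],
            },
            "InstancesDistribution": {
                "OnDemandBaseCapacity": on_demand_base,
                # Everything above the base runs on Spot.
                "OnDemandPercentageAboveBaseCapacity": 0,
                "SpotAllocationStrategy": "price-capacity-optimized",
                # SpotMaxPrice is deliberately omitted so it defaults to the On-Demand price.
            },
        },
    }


# Placeholder values for illustration only.
request = mixed_instances_request(
    group_name="web-asg",
    launch_template_name="web-template",
    instance_types=["m5.large", "m5a.large", "m5d.large", "m5n.large", "m6i.large",
                    "m6a.large", "m6id.large", "m7i.large", "m7a.large", "m4.large"],
    subnet_ids=["subnet-aaaa1111", "subnet-bbbb2222", "subnet-cccc3333"],
)

# To apply it (requires AWS credentials and an existing launch template):
#   import boto3
#   boto3.client("autoscaling").create_auto_scaling_group(**request)
```

Building the request as a plain dictionary keeps it easy to review or unit-test before anything is sent to AWS.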

Once you've optimized instance selection, it’s time to focus on implementing smart scaling policies to keep costs under control dynamically.

Set Up Smart Scaling Policies

Dynamic Scaling with CloudWatch Alarms

Target tracking scaling is a straightforward way to maintain desired resource levels. You set a target metric - like 50% average CPU usage - and AWS takes care of the rest. It automatically creates and manages the necessary CloudWatch alarms to keep your capacity aligned with that target. This ensures your resources adjust dynamically to meet demand.
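As a rough sketch, a 50% CPU target could be expressed as a request for boto3's `put_scaling_policy` call like this. The group name, policy name, and the 300-second warmup are assumptions for illustration.

```python
def target_tracking_request(group_name, target_cpu_percent=50.0, warmup_seconds=300):
    """Build keyword arguments for the autoscaling put_scaling_policy call."""
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-cpu-target-tracking",
        "PolicyType": "TargetTrackingScaling",
        # Time a new instance needs before its metrics count toward the group average.
        "EstimatedInstanceWarmup": warmup_seconds,
        "TargetTrackingConfiguration": {
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ASGAverageCPUUtilization",
            },
            "TargetValue": float(target_cpu_percent),
            # Keep scale-in enabled so idle capacity is removed again.
            "DisableScaleIn": False,
        },
    }


policy = target_tracking_request("web-asg")

# To apply it (requires AWS credentials):
#   import boto3
#   boto3.client("autoscaling").put_scaling_policy(**policy)
```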

Step scaling, on the other hand, is ideal for handling sudden traffic spikes. It allows you to define specific capacity increments based on metric thresholds. While it provides more control for handling surges, it requires a bit more manual setup compared to target tracking.
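To illustrate how step adjustments map a metric breach to a capacity increment, the sketch below mimics the lookup locally. The step boundaries and the 60% alarm threshold are invented for the example, and `pick_step_adjustment` is a simplified stand-in for what the service does, not AWS code. Bounds are expressed relative to the alarm threshold, as in the `StepAdjustments` structure.

```python
# Hypothetical scale-out steps for an alarm threshold of 60% CPU.
SCALE_OUT_STEPS = [
    {"MetricIntervalLowerBound": 0, "MetricIntervalUpperBound": 10, "ScalingAdjustment": 1},
    {"MetricIntervalLowerBound": 10, "MetricIntervalUpperBound": 20, "ScalingAdjustment": 2},
    {"MetricIntervalLowerBound": 20, "ScalingAdjustment": 4},  # no upper bound
]


def pick_step_adjustment(metric_value, alarm_threshold, steps):
    """Return the instance increment for the step that contains the breach size."""
    breach = metric_value - alarm_threshold
    for step in steps:
        lower = step.get("MetricIntervalLowerBound")
        upper = step.get("MetricIntervalUpperBound")
        above_lower = lower is None or breach >= lower
        below_upper = upper is None or breach < upper
        if above_lower and below_upper:
            return step["ScalingAdjustment"]
    return 0  # metric is below the threshold: no scale-out


for cpu in (50, 65, 75, 95):
    print(cpu, "->", pick_step_adjustment(cpu, 60, SCALE_OUT_STEPS))
```

The bigger the breach, the bigger the increment, which is what makes step scaling useful for sharp surges.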

Picking the right metrics for your workload is key. For compute-heavy tasks, average CPU usage might be your go-to, while ALB request count could work better for web traffic. If your application is memory-intensive, you can use the CloudWatch agent to track memory usage.

Be sure to enable detailed monitoring and configure instance warmup times. This ensures your scaling decisions are both accurate and responsive.

For cost optimization, set your target tracking value as high as you can while leaving room for unexpected spikes. FinOps experts suggest keeping average CPU utilization between 40% and 70% to strike a balance between performance and efficiency. If you’re using multiple scaling policies - like one for CPU and another for queue depth - Auto Scaling will prioritize the policy that requires the largest capacity to maintain availability.
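A quick back-of-the-envelope model shows why a higher target saves money and how competing policies resolve. It assumes capacity scales proportionally with the metric, which is a simplification rather than the exact algorithm AWS uses. All numbers and helper names are hypothetical.

```python
import math


def capacity_for_target(current_capacity, current_metric, target_value):
    """Approximate instances needed to bring the average metric back to the target."""
    return max(1, math.ceil(current_capacity * current_metric / target_value))


def combined_desired_capacity(proposals):
    """With several policies, the one asking for the most capacity wins."""
    return max(proposals.values())


# 10 instances currently averaging 80% CPU.
at_50 = capacity_for_target(10, 80, 50)
at_70 = capacity_for_target(10, 80, 70)

# A second policy targets 100 queued messages per instance; backlog is 120 per instance.
proposals = {"cpu": at_50, "queue_depth": capacity_for_target(10, 120, 100)}
print(at_50, at_70, combined_desired_capacity(proposals))
```

In this toy case the 70% target needs four fewer instances than the 50% target for the same load, at the price of less headroom for sudden spikes.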

To further refine your scaling strategy, consider complementing these dynamic policies with predictive and scheduled scaling for cyclical demand patterns.

Predictive and Scheduled Scaling Policies

To manage both costs and performance effectively, combine dynamic scaling with predictive and scheduled scaling policies.

Predictive scaling leverages machine learning to analyze up to 14 days of historical data and forecast your capacity needs for the next 48 hours. It’s ideal for handling recurring patterns, like weekday morning traffic surges or nightly batch processes. Forecasts are updated every six hours using the latest CloudWatch data. While these native features are powerful, it is helpful to understand how they compare to third-party optimization tools.

Start by using predictive scaling in "forecast-only" mode. This lets you evaluate its accuracy before enabling it to actively manage your resources. Predictive scaling needs at least 24 hours of historical data to produce a forecast, though 14 days provides more reliable predictions.

To ensure your resources are ready when demand hits, use the "Pre-launch instances" setting (SchedulingBufferTime). This spins up capacity a few minutes before a forecasted spike, giving instances enough time to warm up. Keep in mind, predictive scaling only handles scaling out; pair it with target tracking to scale down idle resources.
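A predictive scaling policy in forecast-only mode with a pre-launch buffer might look like the following request for boto3's `put_scaling_policy`. The group name, the 50% target, and the 300-second buffer are assumptions chosen for the example.

```python
def predictive_scaling_request(group_name, target_cpu_percent=50.0,
                               mode="ForecastOnly", prelaunch_seconds=300):
    """Build keyword arguments for a predictive scaling policy."""
    if mode not in ("ForecastOnly", "ForecastAndScale"):
        raise ValueError("mode must be 'ForecastOnly' or 'ForecastAndScale'")
    return {
        "AutoScalingGroupName": group_name,
        "PolicyName": f"{group_name}-predictive-cpu",
        "PolicyType": "PredictiveScaling",
        "PredictiveScalingConfiguration": {
            "MetricSpecifications": [
                {
                    "TargetValue": float(target_cpu_percent),
                    "PredefinedMetricPairSpecification": {
                        "PredefinedMetricType": "ASGCPUUtilization",
                    },
                }
            ],
            # Start in forecast-only mode; switch to ForecastAndScale once forecasts look right.
            "Mode": mode,
            # Launch instances this many seconds before the forecasted demand.
            "SchedulingBufferTime": prelaunch_seconds,
        },
    }


forecast_only = predictive_scaling_request("web-asg")

# To apply it (requires AWS credentials):
#   import boto3
#   boto3.client("autoscaling").put_scaling_policy(**forecast_only)
```

Once the forecast has proven accurate, the same helper can be called with `mode="ForecastAndScale"` to let the policy act on its predictions.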

Scheduled scaling is another tool for managing predictable usage patterns. It’s great for fixed schedules, like adding capacity for business hours (e.g., 8:00 AM to 6:00 PM, Monday through Friday). You can create cron-based schedules that scale out at 7:45 AM, so instances are warmed up before the workday starts, and scale in at 6:15 PM. Recurring schedules are evaluated in UTC unless you specify a time zone. Before setting up scheduled actions, remove any conflicting or outdated ones to avoid unexpected capacity changes.
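The business-hours schedule described above could be sketched as two requests for boto3's `put_scheduled_update_group_action`. The group name, capacities, and time zone are placeholders; the desired capacities must fall within the group's minimum and maximum size.

```python
def business_hours_actions(group_name, day_capacity, night_capacity,
                           time_zone="America/New_York"):
    """Build two recurring scheduled actions: weekday scale-out and scale-in."""
    def action(name, cron, capacity):
        return {
            "AutoScalingGroupName": group_name,
            "ScheduledActionName": name,
            # Cron fields: minute hour day-of-month month day-of-week (1-5 = Mon-Fri).
            "Recurrence": cron,
            "TimeZone": time_zone,
            "DesiredCapacity": capacity,
        }

    return [
        action("scale-out-weekday-morning", "45 7 * * 1-5", day_capacity),
        action("scale-in-weekday-evening", "15 18 * * 1-5", night_capacity),
    ]


actions = business_hours_actions("web-asg", day_capacity=12, night_capacity=3)

# To apply them (requires AWS credentials):
#   import boto3
#   client = boto3.client("autoscaling")
#   for a in actions:
#       client.put_scheduled_update_group_action(**a)
```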

| Scaling Type | Best Use Case | How It Works | Data Required |
|---|---|---|---|
| Target Tracking | Standard metrics that scale with load | Automatically maintains a target metric value | None |
| Step Scaling | Aggressive responses to large spikes | Scales in predefined increments based on thresholds | None |
| Predictive Scaling | Cyclical/recurring patterns | Uses ML to forecast capacity needs 48 hours ahead | 24 hours minimum, 14 days optimal |
| Scheduled Scaling | Fixed events and business hours | Scales at specific times using cron rules | None |

Monitor and Optimize Regularly

Review Auto Scaling Activity Reports

Regularly reviewing scaling activity reports is key to spotting inefficiencies and trimming costs. Look for signs of frequent scaling, often referred to as "thrashing", or insufficient scaling. These issues typically point to overly aggressive thresholds or too much idle capacity. By staying on top of these reports, you can avoid paying for resources you don't actually need.
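One simple way to spot thrashing is to count how often the group flips between scaling out and scaling in within a short window. The sketch below works on a hypothetical list of `(timestamp, desired_capacity)` pairs that you might assemble from your activity history; the one-hour window is an arbitrary assumption, not an AWS-defined limit.

```python
from datetime import datetime, timedelta


def count_direction_reversals(capacity_history, window=timedelta(hours=1)):
    """Count scale-out/scale-in flips that follow the previous change within `window`."""
    reversals = 0
    last_direction = 0
    last_change_time = None
    for (_, prev_cap), (cur_time, cur_cap) in zip(capacity_history, capacity_history[1:]):
        if cur_cap == prev_cap:
            continue  # no capacity change, nothing to classify
        direction = 1 if cur_cap > prev_cap else -1
        if (last_direction and direction != last_direction
                and cur_time - last_change_time <= window):
            reversals += 1
        last_direction = direction
        last_change_time = cur_time
    return reversals


# Made-up history: capacity bounces between 4 and 6 within half an hour.
day = datetime(2024, 1, 15)
history = [
    (day.replace(hour=9, minute=0), 4),
    (day.replace(hour=9, minute=5), 6),
    (day.replace(hour=9, minute=12), 4),
    (day.replace(hour=9, minute=20), 6),
    (day.replace(hour=9, minute=27), 4),
    (day.replace(hour=12, minute=0), 5),
]
print("reversals:", count_direction_reversals(history))
```

A high count over a short period suggests thresholds that are too tight or cooldown and warmup settings that are too short.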

Pay close attention to any "ActiveWithProblems" errors in your reports. These errors indicate that AWS couldn't properly apply your scaling configurations, which can lead to wasted resources. If you're experimenting with predictive scaling, consider starting in "Forecast only" mode. This lets you test how accurate the machine learning predictions are before they begin making live adjustments to your capacity.

Also, check if your scaling metrics and load metrics are in sync. For instance, if CPU utilization doesn't align closely with request count, your scaling might not reflect real demand, leading to overspending. Revisit your minimum and maximum instance limits regularly. As your workload patterns change, these boundaries should be adjusted to prevent over-provisioning.

Once you've reviewed your reports, use real-time data from CloudWatch to make proactive adjustments.

Track Resource Utilization with CloudWatch

Building on your activity report reviews, CloudWatch dashboards help you monitor active resource use and fine-tune your configurations in real time. This ongoing monitoring is critical for keeping your costs in check.

Enable detailed monitoring on your EC2 instances for one-minute data intervals instead of the default five-minute updates. These shorter intervals ensure your scaling policies react quickly and accurately, avoiding decisions based on outdated data. Activate Auto Scaling metrics like GroupInServiceInstances and GroupDesiredCapacity to visualize capacity trends on forecast graphs.

If you're managing mixed instance groups with instance weighting, focus on metrics like GroupInServiceCapacity and GroupTotalCapacity. These metrics reveal the actual compute power in use, rather than just the number of servers.
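Group metrics are not published until you turn them on. A minimal sketch of the request for boto3's `enable_metrics_collection` follows; the group name is a placeholder and the helper is hypothetical. Detailed EC2 monitoring is a separate setting, typically enabled through the launch template.

```python
def group_metrics_request(group_name, uses_instance_weights=False):
    """Build keyword arguments for the autoscaling enable_metrics_collection call."""
    metrics = ["GroupDesiredCapacity", "GroupInServiceInstances"]
    if uses_instance_weights:
        # Capacity-unit metrics matter when instance types carry different weights.
        metrics += ["GroupInServiceCapacity", "GroupTotalCapacity"]
    return {
        "AutoScalingGroupName": group_name,
        "Metrics": metrics,
        "Granularity": "1Minute",
    }


weighted_request = group_metrics_request("web-asg", uses_instance_weights=True)

# To apply it (requires AWS credentials):
#   import boto3
#   boto3.client("autoscaling").enable_metrics_collection(**weighted_request)
```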

The AWS Compute Optimizer is another valuable tool. It examines your usage patterns and flags Auto Scaling groups as "idle" if no instances exceed 5% peak CPU utilization or 5 MB/day network usage over a 14-day period. It also offers actionable recommendations, such as switching to Graviton or burstable instance types to save money.
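The idle rule as stated above is easy to reproduce on your own metrics for a quick sanity check. This sketch only mirrors the thresholds quoted in this article; it is not Compute Optimizer's actual implementation, and the input format (one dictionary per instance with 14-day peaks) is an assumption.

```python
def is_idle_group(instances, cpu_threshold_pct=5.0, network_threshold_mb_per_day=5.0):
    """True if no instance exceeded either threshold during the lookback period."""
    if not instances:
        return False  # nothing to evaluate
    return all(
        i["peak_cpu_pct"] <= cpu_threshold_pct
        and i["network_mb_per_day"] <= network_threshold_mb_per_day
        for i in instances
    )


# Made-up 14-day peaks per instance.
quiet_group = [
    {"peak_cpu_pct": 2.1, "network_mb_per_day": 0.8},
    {"peak_cpu_pct": 3.4, "network_mb_per_day": 1.2},
]
busy_group = quiet_group + [{"peak_cpu_pct": 41.0, "network_mb_per_day": 900.0}]
print(is_idle_group(quiet_group), is_idle_group(busy_group))
```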

For predictive scaling, monitor the PredictiveScalingMetricPairCorrelation metric to ensure your scaling and load metrics are aligned. A weak correlation suggests your policy might be allocating unnecessary capacity based on incorrect signals. Compare PredictiveScalingLoadForecast with actual load data regularly to catch inaccuracies before they drive up costs.
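To build intuition for what a strong or weak correlation looks like, you can compute a plain Pearson coefficient on your own samples of the load metric and the scaling metric. This is a generic statistical sketch with invented data; how AWS derives its correlation metric internally is not covered here.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / math.sqrt(var_x * var_y)


# Invented hourly samples.
request_count = [100, 200, 300, 400]
cpu_tracks_load = [20, 40, 60, 80]      # CPU rises with requests
cpu_ignores_load = [50, 48, 52, 49]     # CPU barely reacts to requests

print(round(pearson(request_count, cpu_tracks_load), 2))
print(round(pearson(request_count, cpu_ignores_load), 2))
```

A value near 1 means the scaling metric follows the load closely; a value near 0 suggests the policy is steering by a signal that does not reflect demand.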

Lastly, audit your CloudWatch alarms and remove any that are no longer needed. Orphaned alarms can lead to unnecessary charges.

sbb-itb-0f2edab

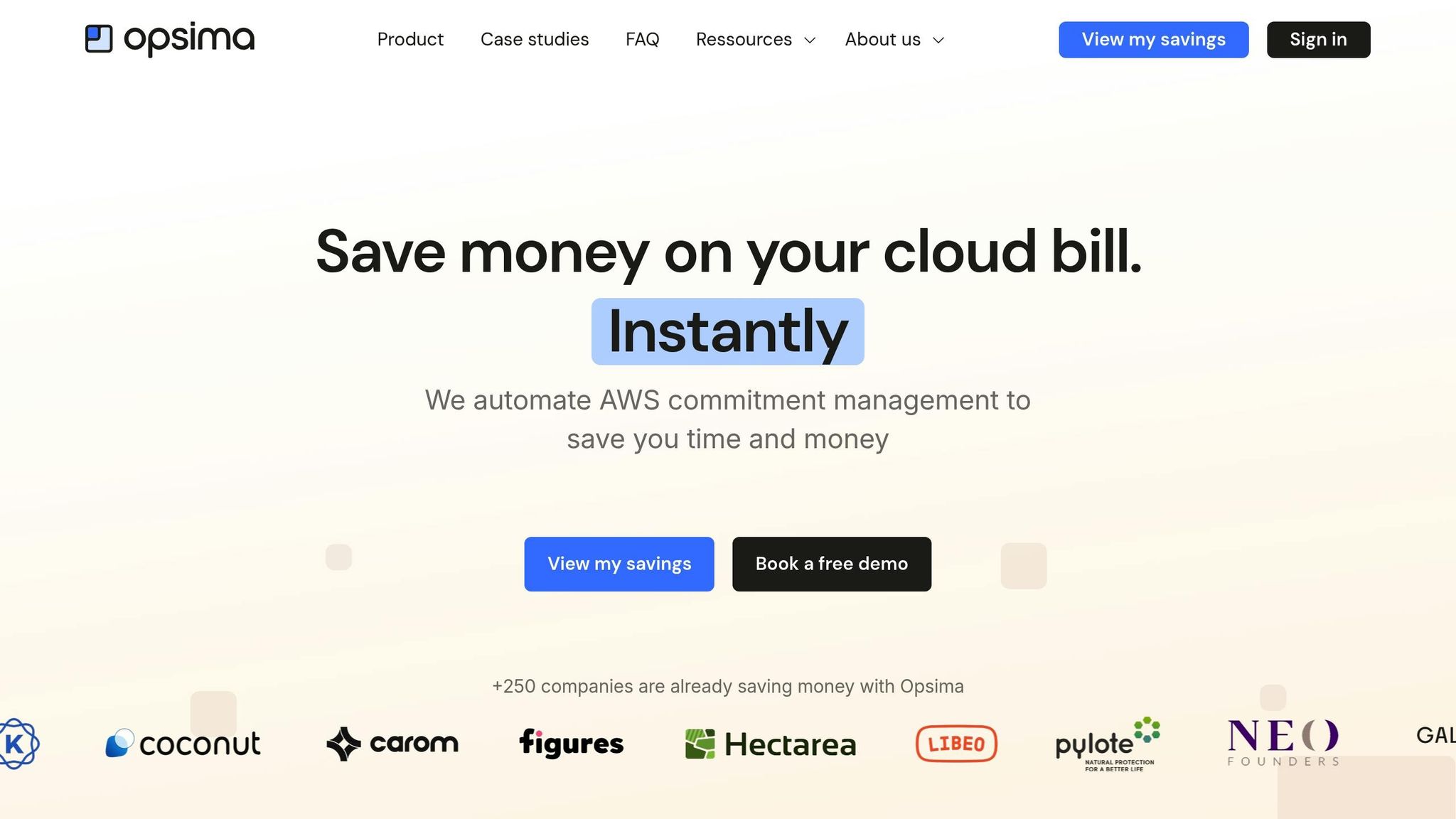

Use Opsima for Automated Commitment Management

How Opsima Reduces AWS Bills by Up to 40%

Keeping track of Savings Plans and Reserved Instances in a constantly changing Auto Scaling environment can feel like a never-ending challenge. It’s no surprise that businesses lose up to 32% of their cloud budgets due to unpredictable workloads and pricing complexities.

Opsima simplifies this process by automating commitment management. It evaluates your usage patterns and market prices to lock in the best combination of Savings Plans and Reserved Instances. This means you’re consistently getting the lowest possible rates for services like EC2, RDS, Lambda, and more.

The platform also keeps a close eye on your baseline capacity - the minimum resources maintained by Auto Scaling groups. Opsima ensures that these essential needs are covered while still allowing your infrastructure to scale up when demand increases.

Benefits of Using Opsima with Auto Scaling

With Opsima, you don’t have to sacrifice flexibility for savings. It applies Compute Savings Plans across different instance families, sizes, and regions, making sure your system can scale dynamically without losing the benefit of committed pricing.

What’s more, Opsima fine-tunes costs across a range of AWS services, including EC2, RDS, Lambda, Fargate, and SageMaker. And while it’s cutting costs, it also keeps your operations secure, so you can focus on growth without worrying about inefficiencies.

Conclusion

Key Takeaways

Effective AWS Auto Scaling practices can transform your cloud environment by improving efficiency and cutting unnecessary costs. At its core, AWS Auto Scaling adjusts capacity to match demand, ensuring you’re not overpaying for idle resources or underprepared for traffic spikes.

For fault-tolerant workloads, Spot Instances are a great way to cut costs. Combining Spot and On-Demand Instances helps strike a balance between savings and availability, giving you flexibility without compromising reliability.

When it comes to scaling policies, target tracking ensures steady utilization, such as maintaining CPU usage around 50%. For workloads with recurring traffic patterns, predictive scaling adds capacity ahead of time, so you’re ready before the forecasted peak arrives.

"Done right, auto scaling cuts idle spend while protecting latency during spikes. Most teams leave 20–40% on the table through oversized baselines, missing schedules, or mis-tuned thresholds."

Monitoring is critical. Use detailed one-minute CloudWatch metrics to catch load changes as they happen. Regularly reviewing Auto Scaling activity reports is another must - data reveals that nearly 40% of EC2 instances run at under 10% CPU utilization even during peak times.

While scaling policies handle resource allocation, commitment management locks in savings. Tools like Opsima automate it, helping you optimize your mix of Savings Plans and Reserved Instances and keep commitments aligned with actual usage. The result? Lower AWS bills without sacrificing flexibility or performance.

FAQs

How can Spot Instances help lower AWS compute costs?

Spot Instances offer a way to slash your compute costs - sometimes by as much as 90% - compared to On-Demand Instances. This is possible because they utilize unused EC2 capacity that AWS provides at steeply discounted rates.

These instances are a great option for cost-conscious users, but they work best for workloads that can tolerate interruptions. Think of tasks like batch processing, data analysis, or applications with flexible requirements. Pairing Spot Instances with AWS Auto Scaling can take your cost savings even further by optimizing resource usage dynamically, helping you make the most of your AWS budget.

How does combining Spot and On-Demand Instances in AWS Auto Scaling help save costs?

Using a combination of Spot Instances and On-Demand Instances within an Auto Scaling group is a smart way to balance cost efficiency with reliability. Spot Instances, which can cost up to 90% less than On-Demand Instances, are ideal for work that tolerates interruptions. Meanwhile, On-Demand Instances ensure steady performance for your essential workloads.

This setup helps lower expenses without sacrificing application availability. Auto Scaling takes care of adjusting the instance mix based on factors like pricing and capacity, so your service remains uninterrupted. To save even more, you can pair this strategy with Reserved Instances or Savings Plans for the On-Demand portion.

Tools like Opsima make this process even easier. By tracking your Spot and On-Demand usage, optimizing allocations, and managing commitments, Opsima can help reduce your AWS costs by up to 40%.

How does predictive scaling help save costs with AWS Auto Scaling?

Predictive scaling leverages up to 14 days of historical performance data from Amazon CloudWatch to anticipate hourly resource demands for the next 48 hours. It adjusts capacity in advance of traffic surges, ensuring your applications have the resources required without over-provisioning.

Because capacity arrives just before the forecasted demand, you can run a leaner baseline instead of keeping extra instances idle around the clock, while a dynamic policy such as target tracking scales in during quieter periods. The result? Lower EC2 costs and better resource efficiency.